Abstract

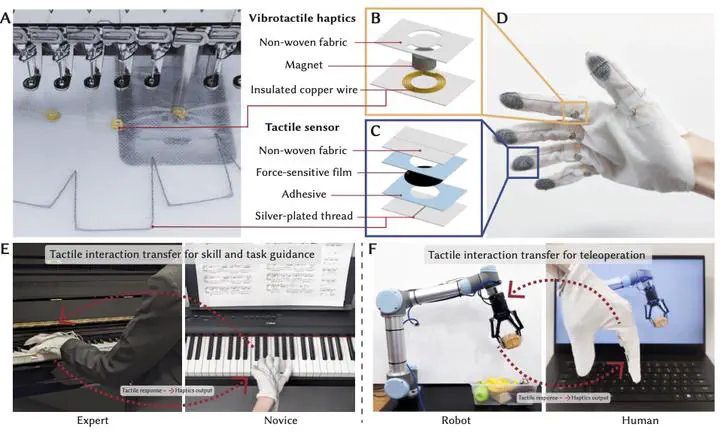

Human-machine interfaces for capturing, conveying, and sharing tactile information across time and space hold immense potential for healthcare, AR/VR, human-robot collaboration, and skill development. To realize this potential, such interfaces should be wearable, unobtrusive, and scalable in both resolution and body coverage. Taking a step towards this vision, we present a digitally designed, rapidly fabricated, textile-based wearable human-machine interface with integrated tactile sensors and vibrotactile haptic actuators. We leverage a digital embroidery machine to seamlessly embed piezoresistive force sensors and arrays of vibrotactile actuators into textiles in a customizable, scalable, and modular manner. We use this process to create gloves that can record, reproduce, and transfer tactile interactions. Through user studies, we investigate how people perceive the sensations reproduced by the vibrotactile haptic actuators integrated into our gloves. To improve the effectiveness of tactile interaction transfer, we develop a novel machine-learning pipeline that adaptively models how each individual user reacts to haptic sensations and then optimizes the haptic feedback parameters accordingly. We demonstrate adaptive tactile interaction transfer with three end-to-end systems: alleviating tactile occlusion, guiding people to perform physical skills, and enabling responsive robot teleoperation.